!pip install graphviz

!pip install fastbook

!pip install fastai

What is Machine Learning

from fastbook import *

Traditional Programming

gv('''program[shape=box3d]
inputs->program->results''')

Program:

- if else

- loop

- function

- etc

We transfer our knowledge to the computer.

AI vs ML vs DL

Artificial Intelligence

Artificial Intelligence (AI) is a branch of computer science that aims to create systems capable of performing tasks that would normally require human intelligence. These tasks include problem solving, understanding language, recognizing patterns, learning from experience, and making decisions.

Non-machine-learning AI requires humans to program the rules (that is, to teach the computer how to do the task).

Some examples of non-machine-learning AI:

Rule-based System

def predict_illness(symptoms: list[str]) -> str:
    # Every rule below is written by hand; nothing is learned from data
    if 'fever' in symptoms:
        if 'cough' in symptoms:
            return 'flu'
        elif 'sore throat' in symptoms:
            return 'cold'
        elif 'vomiting' in symptoms:
            return 'food poisoning'
        else:
            return 'unknown'
    elif 'rash' in symptoms:
        return 'measles'
    else:
        return 'unknown'

print(predict_illness(['fever', 'cough']))
print(predict_illness(['fever', 'vomiting']))

flu
food poisoning

Constraint Satisfaction Problem

def is_valid(board, row, col):
    # Check if there is a queen to the left in the same row
    for i in range(col):
        if board[row][i] == 1:
            return False
    # Check if there is a queen on the upper-left diagonal
    for i, j in zip(range(row, -1, -1), range(col, -1, -1)):
        if board[i][j] == 1:
            return False
    # Check if there is a queen on the lower-left diagonal
    for i, j in zip(range(row, len(board)), range(col, -1, -1)):
        if board[i][j] == 1:
            return False
    return True

def solve_queen(board, col):
    # Base case: all queens are placed
    if col == len(board):
        print_board(board)
        return True
    # Recursive case: try to place a queen in each row of the current column
    for i in range(len(board)):
        if is_valid(board, i, col):
            board[i][col] = 1
            # The result is ignored, so the search continues and prints every solution
            solve_queen(board, col + 1)
            board[i][col] = 0  # Backtrack
    return False

def print_board(board):
    for row in board:
        for cell in row:
            if cell == 1:
                print("Q", end=" ")
            else:
                print(".", end=" ")
        print()
    print()

# Initialize the board
n = 4
board = [[0] * n for _ in range(n)]

# Solve the N-queens problem (here n = 4)
solve_queen(board, 0)

. . Q .
Q . . .
. . . Q
. Q . .

. Q . .
. . . Q
Q . . .
. . Q .

False

Machine Learning

Machine Learning algorithms are able to learn without being explicitly programmed.

Instead of writing the rules, we give the computer the data and let the computer learn the rules by itself.

Describe to me, in words, how to recognize an apple.

How about this, is it an apple?

Humans learn by example. We learn to recognize an apple by seeing many apples, and a cherry by seeing many cherries.

Machine Learning consists of two stages:

Training Stage

We give the computer only the data and the expected output. The computer learns the rules by itself. The output of this stage is the model.

gv('''training[shape=box3d]
model[shape=box3d]
inputs->training->model
results->training''')

Inference Stage

Once the model is created, we can use the model to predict the output for new data.
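As a rough sketch of the two stages (not the notebook's own code), the snippet below "trains" about the simplest possible model, a single temperature threshold, from labelled examples, and then uses it for inference. The data values and the names `train` and `predict` are made up for illustration.

```python
# Hypothetical toy data: body temperature in Celsius -> 1 if sick, 0 if healthy
temps = [36.5, 36.8, 37.0, 37.9, 38.4, 39.1]
labels = [0, 0, 0, 1, 1, 1]

def train(inputs, results):
    """Training stage: pick the threshold that misclassifies the fewest examples."""
    values = sorted(set(inputs))
    # Candidate thresholds are the midpoints between consecutive distinct values
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(threshold):
        return sum((x > threshold) != bool(y) for x, y in zip(inputs, results))

    return min(candidates, key=errors)

def predict(model, x):
    """Inference stage: apply the learned threshold to new data."""
    return int(x > model)

model = train(temps, labels)  # here the "model" is just the learned threshold
print(model)
print(predict(model, 38.0), predict(model, 36.6))  # prints: 1 0
```

Nobody wrote the rule "sick above roughly 37.4 degrees"; it falls out of the examples. Real algorithms such as decision trees follow the same pattern with many more parameters.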

gv('''
model[shape=box3d]
inputs -> model -> results
''')

Let’s see an example in action.

Decision Tree

gv('''
node [shape=box]
inputs -> fever [label="symptoms"]
fever -> cough [label="yes"]
cough -> sore_throat [label="no"]
cough -> flu [label="yes"]
cough -> unknown [label="no"]
sore_throat -> vomiting [label="yes"]
sore_throat -> rash [label="no"]
vomiting -> food_poisoning [label="yes"]
vomiting -> unknown [label="no"]
rash -> measles [label="yes"]
rash -> unknown [label="no"]
''')

Unlike traditional programming, we don’t need to write the rules. We only give the computer the data and the expected output, and it builds the decision tree by itself.

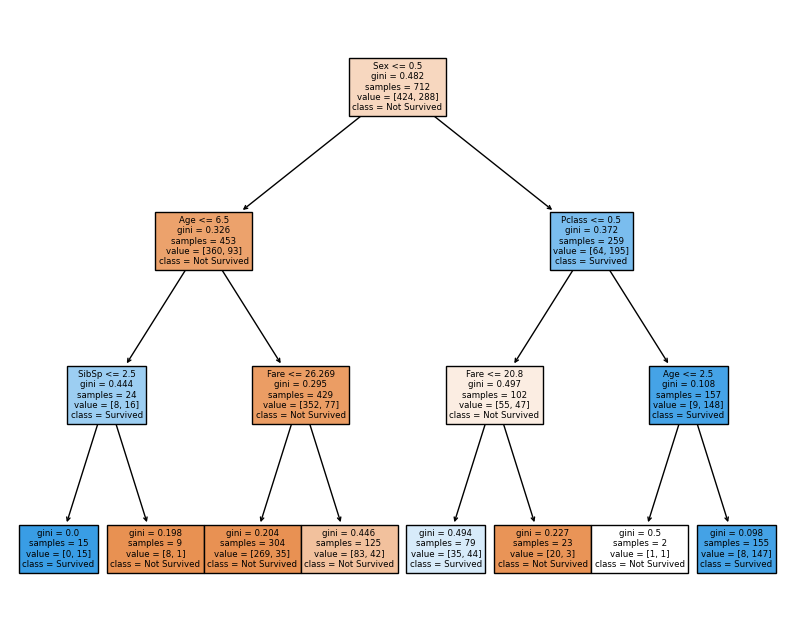

Let’s build a decision tree to predict whether a Titanic passenger survived.

from sklearn.tree import DecisionTreeClassifier, plot_tree
import matplotlib.pyplot as plt
import pandas as pd

# Load the data
url = 'https://raw.githubusercontent.com/datasciencedojo/datasets/master/titanic.csv'
df = pd.read_csv(url)
df.head()

| | PassengerId | Survived | Pclass | Name | Sex | Age | SibSp | Parch | Ticket | Fare | Cabin | Embarked |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 0 | 3 | Braund, Mr. Owen Harris | male | 22.0 | 1 | 0 | A/5 21171 | 7.2500 | NaN | S |
| 1 | 2 | 1 | 1 | Cumings, Mrs. John Bradley (Florence Briggs Th... | female | 38.0 | 1 | 0 | PC 17599 | 71.2833 | C85 | C |
| 2 | 3 | 1 | 3 | Heikkinen, Miss. Laina | female | 26.0 | 0 | 0 | STON/O2. 3101282 | 7.9250 | NaN | S |
| 3 | 4 | 1 | 1 | Futrelle, Mrs. Jacques Heath (Lily May Peel) | female | 35.0 | 1 | 0 | 113803 | 53.1000 | C123 | S |
| 4 | 5 | 0 | 3 | Allen, Mr. William Henry | male | 35.0 | 0 | 0 | 373450 | 8.0500 | NaN | S |

Let’s preprocess the data first.

# Define the dependent variable
dep_var = 'Survived'

# Define the categorical and continuous variables
cat_names = ['Pclass', 'Sex', 'Embarked']
cont_names = ['Age', 'SibSp', 'Parch', 'Fare']

# Preprocess the data: keep the selected columns and drop rows with missing values
df = df[cat_names + cont_names + [dep_var]].dropna()
# Encode each categorical column as integer codes
df[cat_names] = df[cat_names].apply(lambda x: pd.factorize(x)[0])
X = df[cat_names + cont_names]
y = df[dep_var]

X.head()

| | Pclass | Sex | Embarked | Age | SibSp | Parch | Fare |
|---|---|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 22.0 | 1 | 0 | 7.2500 |
| 1 | 1 | 1 | 1 | 38.0 | 1 | 0 | 71.2833 |
| 2 | 0 | 1 | 0 | 26.0 | 0 | 0 | 7.9250 |
| 3 | 1 | 1 | 0 | 35.0 | 1 | 0 | 53.1000 |
| 4 | 0 | 0 | 0 | 35.0 | 0 | 0 | 8.0500 |

y.head() 0 0

1 1

2 1

3 1

4 0

Name: Survived, dtype: int64# Create the decision tree model

tree = DecisionTreeClassifier(max_depth=3)

tree.fit(X, y)

# Print the decision tree

plt.figure(figsize=(10, 8))

plot_tree(tree, feature_names=cat_names+cont_names, class_names=['Not Survived', 'Survived'], filled=True)

plt.show()

# Use the tree to infer

tree.predict([[1, 1, 0, 35.0, 1, 0, 52.1000]])/Users/ruangguru/.pyenv/versions/3.11.1/lib/python3.11/site-packages/sklearn/base.py:439: UserWarning: X does not have valid feature names, but DecisionTreeClassifier was fitted with feature names

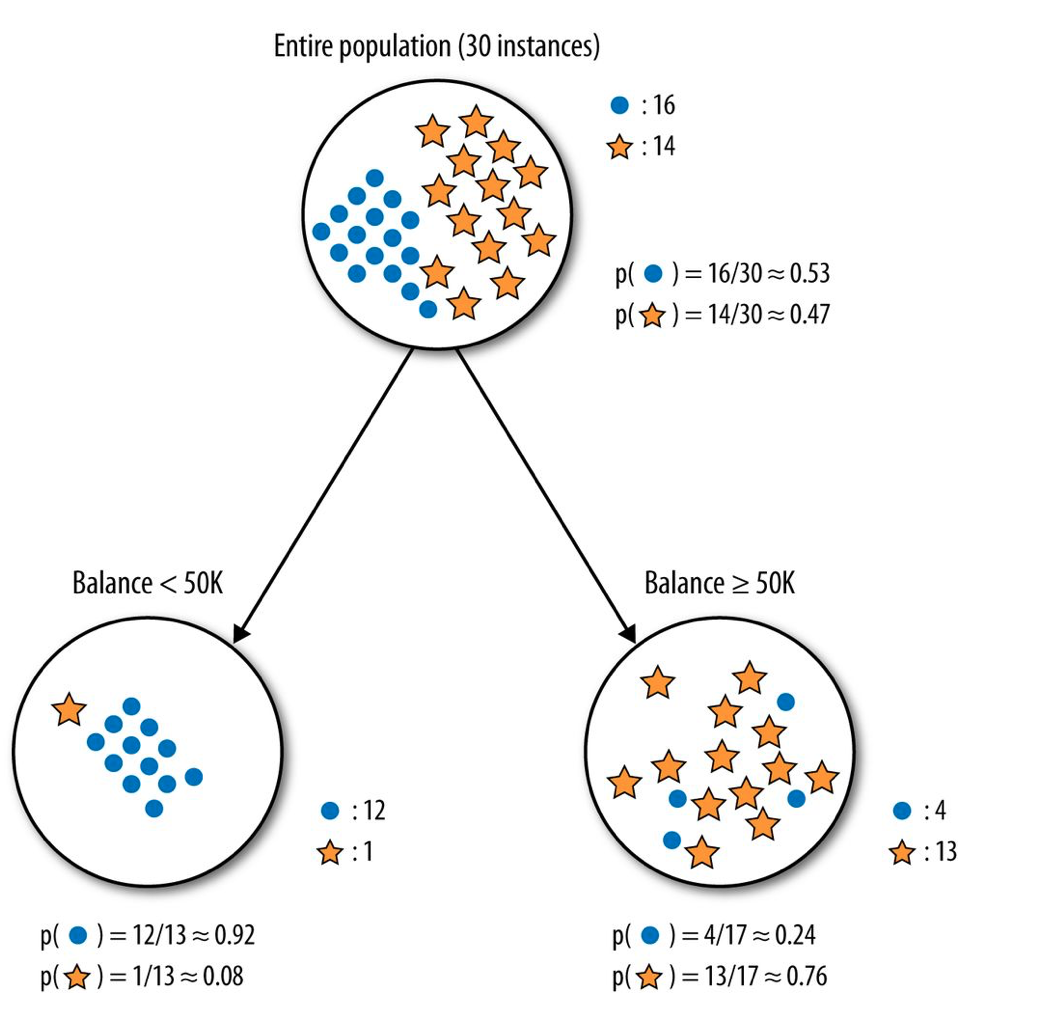

warnings.warn(array([1])What is Gini?

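Gini is a measure of impurity. The lower the Gini value, the purer the node.

\[\text{Gini} = 1 - \sum_{i=1}^{n} p_i^2\]

Here \(p_i\) is the proportion of samples in the node that belong to class \(i\), and \(n\) is the number of classes.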

Source: Learndatasci.com
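To make the formula concrete, here is a minimal sketch that computes the Gini impurity of a single node from its class labels. The function name `gini` and the fruit labels are illustrative, not part of the notebook.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    total = len(labels)
    counts = Counter(labels)
    return 1 - sum((c / total) ** 2 for c in counts.values())

print(gini(['apple'] * 4))                            # pure node -> 0.0
print(gini(['apple', 'apple', 'cherry', 'cherry']))   # 50/50 mix -> 0.5
print(gini(['apple', 'apple', 'apple', 'cherry']))    # 3:1 mix   -> 0.375
```

A node that contains only one class scores 0, and for two classes an even mix gives the maximum of 0.5.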

Depending on how we split the data, the resulting nodes can have different purity.

Source: ekamperi.github.io
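As a small illustration, the sketch below scores two candidate splits of the same eight labels by the size-weighted average Gini of their child nodes, which is a common way to compare splits. The labels and the helper name `split_gini` are assumptions made for this example.

```python
def gini(labels):
    """Gini impurity of one node."""
    total = len(labels)
    return 1 - sum((labels.count(c) / total) ** 2 for c in set(labels))

def split_gini(left, right):
    """Average Gini of the two child nodes, weighted by their sizes."""
    n = len(left) + len(right)
    return len(left) / n * gini(left) + len(right) / n * gini(right)

# Hypothetical labels (1 = survived, 0 = did not), split in two different ways
good_split = split_gini([1, 1, 1, 0], [0, 0, 0, 1])  # children are mostly one class
poor_split = split_gini([1, 0, 1, 0], [1, 0, 1, 0])  # children are as mixed as the parent
print(good_split, poor_split)  # prints: 0.375 0.5
```

The first split lowers the impurity from 0.5 to 0.375, while the second leaves it unchanged, so a tree-building algorithm would prefer the first.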

Deep Learning

Deep Learning is a subset of Machine Learning.

Deep Learning was inspired by the structure and function of the brain, namely the interconnecting of many neurons.

However, as the field of AI has grown and the intricacies of the human brain have been studied more, the goal has shifted toward taking inspiration from the brain rather than duplicating it.

Artificial neural networks (ANNs) frequently outperform other ML techniques on very large and complex problems.
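To give a feel for what "many interconnected neurons" means in code, here is a minimal sketch of one artificial neuron and a tiny two-layer network built from three of them. The weights are hand-picked, hypothetical values; a real network learns them from data during training.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs, then a sigmoid activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 / (1 + math.exp(-z))

def tiny_network(inputs):
    # Hidden layer: two neurons that both read the raw inputs
    h1 = neuron(inputs, [0.5, -0.4], 0.1)
    h2 = neuron(inputs, [-0.3, 0.8], 0.0)
    # Output layer: one neuron that reads the hidden neurons' outputs
    return neuron([h1, h2], [1.2, -0.7], 0.05)

out = tiny_network([1.0, 2.0])
print(out)  # a value between 0 and 1
```

"Deep" refers to stacking many such layers, so that each layer works on the outputs of the previous one.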