```python
!pip install nltk
!pip install transformers
```

Preprocessing

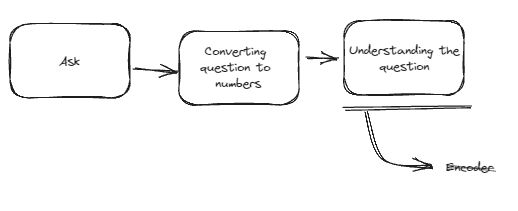

Overview of our first architecture - NLP without neural networks

For our first architecture, we’ll learn how to do sentiment classification using “Naive Bayes”. Along the way, we’ll delve into several key NLP concepts, such as preprocessing, word embeddings, and tokenization, that will later help us understand more complex architectures like Seq2Seq and Transformers!

As you can see in the diagram above, we’ll learn the steps of asking a question, converting that question into numbers, and then making sure our model understands it. In modern architectures this “understanding” is mostly handled by an encoder layer; a method like “Naive Bayes” plays a similar role, but in a much more traditional way.

Note: the “Naive Bayes” method itself will be covered in the next lesson. Look forward to it!

Text classification

One of the tasks we can tackle with NLP without neural networks is “text classification”. The task is as simple as it sounds: which class should the current input be assigned to?

Today we’ll learn how to classify whether a tweet counts as a positive or a negative tweet.

Imagine this tweet:

I’m really excited towards tomorrow for our shop opening, see you guys at xxx!

As humans, we can tell from the tweet above that its author is in a positive mood (excited, happy), so we conclude that it is a “positive tweet”.

So in our first architecture, we’ll learn how to decide whether a tweet is positive or negative by checking every word for hints of positive or negative sentiment. For the tweet above, the hint would be the word “excited”.

Dataset

To learn how to classify tweets by sentiment, we will use this dataset: https://www.kaggle.com/datasets/ferno2/training1600000processednoemoticoncsv. It contains 1.6 million tweets, each already labeled as either positive or negative.
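A minimal sketch of reading labels and text with Python’s standard `csv` module. It assumes the Sentiment140-style layout this file is commonly distributed in: no header row, six columns (polarity, id, date, query, user, text), polarity `0` for negative and `4` for positive, and Latin-1 encoding. Check these assumptions against the actual file before relying on them; the two rows below are made-up samples, not real data.

```python
import csv
import io

# Assumed column layout (Sentiment140-style): polarity, id, date, query, user, text
LABELS = {"0": "negative", "4": "positive"}  # assumed label encoding


def load_tweets(file_obj):
    """Yield (label, text) pairs from a Sentiment140-style CSV file object."""
    for row in csv.reader(file_obj):
        polarity, text = row[0], row[5]
        yield LABELS.get(polarity, "unknown"), text


# Made-up rows in the assumed format, used here instead of the real file
sample = io.StringIO(
    '"0","1","Mon Apr 06 22:19:45 PDT 2009","NO_QUERY","user_a","I hate waiting in line"\n'
    '"4","2","Mon Apr 06 22:20:00 PDT 2009","NO_QUERY","user_b","So excited for tomorrow!"\n'
)
tweets = list(load_tweets(sample))
print(tweets)

# For the real file, something like this (file name and encoding are assumptions):
# with open("training.1600000.processed.noemoticon.csv", encoding="latin-1", newline="") as f:
#     tweets = list(load_tweets(f))
```

Reading row by row with a generator keeps memory use low, which matters with 1.6 million rows.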

Preprocessing - Cleaning noise and consolidating words - The human part before we feed input to the machine

Preprocessing is one place where humans can “help” a machine learning model. One job of preprocessing is to make sure the model isn’t distracted by things that we, as humans, can tell it shouldn’t care about; another is to transform the text so the model works better.

When we’re working on classification (especially with models that don’t use neural networks), we should keep our goal in mind while looking at the dataset: which words does our model really need to consider when classifying our data?

NLP with something like Naive Bayes mostly depends on whether a tweet contains certain words that help decide if it is positive or negative. So there are basically two things we should do before feeding our input to the model:

Removing noise: words or characters that shouldn’t have any effect on our classification task.

Example: 😃 Super excited to share my latest article! @OpenAI 👀👉 http://ai.newpost.com #AI #OpenAI 😎

If we’re doing sentiment classification, we probably don’t need URLs, mentions, hashtags, etc. If we include them, our model might treat this noise as a hint that pushes the tweet’s sentiment towards either positive or negative.

Another example for sentiment classification tasks is removing stopwords. Stopwords are words that occur so frequently in sentences that they carry little meaningful information. Common English stopwords include “the”, “is”, “at”, “which”, and “on”.
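A minimal sketch of stopword removal, assuming a deliberately tiny, hypothetical stopword set built from the examples above. A real project would use a fuller list, such as the one in NLTK’s `stopwords` corpus.

```python
# Hypothetical, deliberately tiny stopword set built from the examples above
STOPWORDS = {"the", "is", "at", "which", "on"}


def remove_stopwords(tokens):
    """Drop tokens whose lowercase form is in STOPWORDS."""
    return [token for token in tokens if token.lower() not in STOPWORDS]


tokens = "The shop is opening at noon on Friday".split()
print(remove_stopwords(tokens))
```

Comparing against `token.lower()` means “The” at the start of a sentence is removed just like “the”.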

Other things we might consider removing are symbols like “?” and “!”, since, at least when we’re not using a neural network, understanding sentiment from symbols might be…

Consolidating words that have similar meanings, by removing their tenses, plurality, prefixes, suffixes, etc.

Words like “exciting” are consolidated with “excited”, “excitement”, “excite”, etc., so that words sharing the same root (“exciting”, “excited”, and “excite” all share the root word “excite”) can be processed together.

Another thing to consider is lowercasing, so that “Exciting”, “exciting”, and “EXCITING” are treated as the same word and our model won’t differentiate between them when learning sentiment.
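A small sketch of why lowercasing matters once we start counting words: without it, the three spellings become three separate features; with it, they collapse into one. `Counter` comes from Python’s standard library, and the word list is made up for illustration.

```python
from collections import Counter

words = ["Exciting", "exciting", "EXCITING"]

# Without lowercasing: three separate features, each seen once
print(Counter(words))

# With lowercasing: one feature seen three times
print(Counter(word.lower() for word in words))
```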

Let’s remove all the noise:

```python
# @title Remove noises

import re
import string


def remove_urls(text):
    url_pattern = re.compile(r'https?://\S+|www\.\S+')
    return url_pattern.sub(r'', text)


def remove_hashtags(text):
    hashtag_pattern = re.compile(r'#\S+')
    return hashtag_pattern.sub(r'', text)


def remove_mentions(text):
    mention_pattern = re.compile(r'@\S+')
    return mention_pattern.sub(r'', text)


def remove_emojis(text):
    emoji_pattern = re.compile("["
                               u"\U0001F600-\U0001F64F"  # emoticons
                               u"\U0001F300-\U0001F5FF"  # symbols & pictographs
                               u"\U0001F680-\U0001F6FF"  # transport & map symbols
                               u"\U0001F1E0-\U0001F1FF"  # flags (iOS)
                               u"\U00002702-\U000027B0"  # dingbats
                               u"\U000024C2-\U0001F251"  # very broad range: also matches many non-emoji characters (e.g. CJK)
                               "]+", flags=re.UNICODE)
    return emoji_pattern.sub(r'', text)


def remove_symbols(text):
    # Remove ASCII punctuation characters listed in string.punctuation
    return text.translate(str.maketrans('', '', string.punctuation))


def preprocess_sentence(text):
    # URLs, hashtags and mentions go first, before punctuation removal breaks them apart
    text = remove_urls(text)
    text = remove_hashtags(text)
    text = remove_mentions(text)
    text = remove_emojis(text)
    text = remove_symbols(text)  # Remove punctuation
    return text


# Example usage:
text = "Hey @user, check out the webpage: https://example.com. I found it awesome! 😎 #exciting"  # @param {type:"string"}
print(preprocess_sentence(text))
```

```text
Hey check out the webpage I found it awesome
```

Stemming and lemmatization

When consolidating words that have the same root, there are two strategies that can be used: Stemming and Lemmatization.

Stemming

Exciting, excited. Happy, happiness. Sad, sadden, sadness. Worrying, worried, worry.

Stemming consolidates words by removing their suffixes (and sometimes prefixes), leaving only the word’s “stem” (the part of the word common to all its inflected variants). It’s easiest to learn by example:

exciting -> excit

What’s distinctive about stemming is that it reduces a word to a string of characters that sets it apart from other words, even though that string sometimes doesn’t “make sense” as a word. As long as it manages to group several forms of the same word into one, that is enough for many tasks.

went != go

Stemming only cares about reducing words to the most basic string of letters that distinguishes them from other words; it ignores synonyms, tenses, and the like. For example, “went” and “go” get different stems even though “went” is just the past tense of “go”.
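To make the suffix-removal idea concrete, here is a toy suffix-stripping stemmer. It is not the Porter algorithm used with NLTK below; the suffix list and the three-letter minimum stem length are arbitrary assumptions chosen for illustration.

```python
# Toy suffix list (an assumption for illustration), longest suffixes tried first
SUFFIXES = sorted(["ment", "ness", "ing", "ed", "es", "e", "s"], key=len, reverse=True)
MIN_STEM_LENGTH = 3  # never chop a word below this many characters


def toy_stem(word):
    """Repeatedly strip the longest matching suffix until none applies."""
    word = word.lower()
    while True:
        for suffix in SUFFIXES:
            if word.endswith(suffix) and len(word) - len(suffix) >= MIN_STEM_LENGTH:
                word = word[: -len(suffix)]
                break  # start over with the shorter word
        else:
            return word  # no suffix matched, so this is the stem


for w in ["exciting", "excited", "excitement", "excite", "went", "go"]:
    print(w, "->", toy_stem(w))
```

All four “excit…” forms end up as the non-word “excit”, while “went” and “go” stay apart: pure suffix stripping has no way of knowing they are the same verb.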

Lemmatization

Lemmatization differs from stemming in that it relies heavily on grammar rules. While both methods aim to reduce words to their base or root form, lemmatization does so through a morphological analysis of each word. It takes grammatical information such as verb tense, plural forms, and even gender into account to find the correct linguistic base form of a word, known as its ‘lemma’.

better -> good. geese -> goose. went -> go

As the examples above show, lemmatization transforms words into their dictionary (base) form, taking their tense, plurality, and more into account.

This can’t be achieved with stemming, since stemming merely “chops off” parts of words rather than consulting a dictionary at all.
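By contrast, lemmatization behaves like a lookup that depends on the part of speech. The sketch below uses a tiny, hypothetical hand-written table keyed by (word, POS); real lemmatizers such as NLTK’s `WordNetLemmatizer` consult the WordNet database and its morphological rules instead.

```python
# Hypothetical mini lemma dictionary keyed by (word, part of speech)
LEMMAS = {
    ("better", "adj"): "good",
    ("geese", "noun"): "goose",
    ("went", "verb"): "go",
    ("meeting", "verb"): "meet",
    ("meeting", "noun"): "meeting",
}


def toy_lemmatize(word, pos):
    """Return the dictionary form for (word, pos), or the lowercased word if unknown."""
    return LEMMAS.get((word.lower(), pos), word.lower())


print(toy_lemmatize("Went", "verb"))
print(toy_lemmatize("meeting", "verb"))
print(toy_lemmatize("meeting", "noun"))
```

The same word, “meeting”, gets a different lemma depending on whether it is used as a verb or a noun, which is why lemmatization needs part-of-speech information.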

Quick library note: NLTK

Going forward, we’ll use NLTK a lot. NLTK, short for Natural Language Toolkit, is a Python library with lots of functionality for working with NLP. You can use it for tasks such as removing stopwords, tokenizing, stemming, lemmatizing, and more. You can learn more at https://www.nltk.org/ and browse its capabilities at https://www.nltk.org/py-modindex.html.

Stemming in practice

```python
#@title Stemming

# Import the necessary libraries
import nltk
from nltk.stem import PorterStemmer
from nltk.tokenize import word_tokenize

# Download required datasets from nltk
nltk.download('punkt')
nltk.download('punkt_tab')  # needed by word_tokenize in newer NLTK releases

stemmer = PorterStemmer()

text = "The striped bats were hanging on their feet and eating best batches of juicy leeches"  #@param {type:"string"}

# Tokenize the text
token_list = word_tokenize(text)

# Apply stemming on the tokens
stemmed_output = ' '.join([stemmer.stem(token) for token in token_list])

print(text)
print(stemmed_output)
```

```text
The striped bats were hanging on their feet and eating best batches of juicy leeches
the stripe bat were hang on their feet and eat best batch of juici leech
```

Lemmatization in practice

Lemmatization is a bit more complex than stemming, because we need every word’s “POS tag” to make sure each word is lemmatized according to its correct part of speech.

POS (Part of speech)

Part of speech is as simple as asking of each word: is it a noun? A verb? An adjective? And so on. This helps make sure every word is converted to the correct lemma.

Unlike stemming, lemmatization needs input that still contains its stopwords so that the POS tags come out correctly. So if you want to lemmatize, make sure POS tagging is done before removing stopwords, or before removing any words at all.

Below is the code for lemmatization, starting with the POS tagging step. Feel free to change the input text to any sentence you’d like to see lemmatization in action on.

```python
#@title POS

# Import the necessary libraries
import nltk
from nltk import pos_tag
from nltk.tokenize import word_tokenize

# Download required datasets from nltk
nltk.download('averaged_perceptron_tagger')
nltk.download('averaged_perceptron_tagger_eng')  # name used by pos_tag in newer NLTK releases
nltk.download('punkt')
nltk.download('punkt_tab')  # needed by word_tokenize in newer NLTK releases


def get_human_readable_pos(treebank_tag):
    """Map `treebank_tag` to equivalent human readable POS tag."""
    if treebank_tag.startswith('J'):
        return "Adjective"
    elif treebank_tag.startswith('V'):
        return "Verb"
    elif treebank_tag.startswith('N'):
        return "Noun"
    elif treebank_tag.startswith('R'):
        return "Adverb"
    else:
        return "Others"


text = "The striped bats were hanging on their feet and eating best batches of juicy leeches"  #@param {type:"string"}

# Tokenize the text
token_list = word_tokenize(text)

# POS tagging on the tokens
pos_tokens = pos_tag(token_list)

# Print each word with its POS tag
for word, pos in pos_tokens:
    print(f"{word} : {get_human_readable_pos(pos)}")
```

```text
The : Others
striped : Adjective
bats : Noun
were : Verb
hanging : Verb
on : Others
their : Others
feet : Noun
and : Others
eating : Verb
best : Adjective
batches : Noun
of : Others
juicy : Noun
leeches : Noun
```

Let’s lemmatize

Now that POS tagging is done, we can pass each word together with its POS tag to our lemmatization function.

```python
#@title Lemmatization

# Import the necessary libraries
import nltk
from nltk import pos_tag
from nltk.corpus import wordnet
from nltk.stem import WordNetLemmatizer
from nltk.tokenize import word_tokenize

# Download required datasets from nltk
nltk.download('averaged_perceptron_tagger')
nltk.download('averaged_perceptron_tagger_eng')  # name used by pos_tag in newer NLTK releases
nltk.download('wordnet')
nltk.download('punkt')
nltk.download('punkt_tab')  # needed by word_tokenize in newer NLTK releases


def get_wordnet_pos(treebank_tag):
    """Map `treebank_tag` to equivalent WordNet POS tag."""
    if treebank_tag.startswith('J'):
        return wordnet.ADJ
    elif treebank_tag.startswith('V'):
        return wordnet.VERB
    elif treebank_tag.startswith('N'):
        return wordnet.NOUN
    elif treebank_tag.startswith('R'):
        return wordnet.ADV
    else:
        # Fall back to noun, the lemmatizer's default POS
        return wordnet.NOUN


lemmatizer = WordNetLemmatizer()

text = "The striped bats were hanging on their feet and eating best batches of juicy leeches"  #@param {type:"string"}

# Tokenize the text
token_list = word_tokenize(text)

# POS tagging on the tokens
pos_tokens = pos_tag(token_list)

# Lemmatize each token using its POS tag
lemmatized_output = ' '.join([lemmatizer.lemmatize(token, get_wordnet_pos(pos)) for token, pos in pos_tokens])

print(lemmatized_output)
```

```text
The striped bat be hang on their foot and eat best batch of juicy leech
```

Tokenization

Tokenization is one of the last steps of preprocessing in NLP. The definition is simple: it’s the process of breaking our preprocessed text down into an array of features that we can feed into the next stage.

Why do we call them features? In our current architecture, a feature is basically a single preprocessed word. Later, when we use neural networks, a feature may refer to a sub-word instead.
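For intuition, a very rough word tokenizer can be written with a single regular expression: each run of word characters becomes a token, and each punctuation mark becomes its own token. This is a simplification for illustration, not how NLTK’s `word_tokenize` works internally; NLTK also handles contractions, quotes, and other cases this regex ignores.

```python
import re

# A run of word characters is one token; any other non-space character is its own token
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def simple_tokenize(text):
    """Split text into a list of word and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


print(simple_tokenize("This is an example sentence for basic tokenization."))
```

On a contraction like “I’m” this regex produces three awkward tokens (“I”, the apostrophe, “m”), one of the cases a real tokenizer treats more carefully.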

A word such as “eating”, when tokenized into sub-words, might be split into something like “eat” + “ing”. Sub-word features mostly matter when we need semantic relations between words; without neural networks they are harder to make use of, and for tasks that require them we can usually just turn to neural networks.

```python
# @title Basic tokenization

# Import required library
import nltk
from nltk.tokenize import word_tokenize

nltk.download('punkt')
nltk.download('punkt_tab')  # needed by word_tokenize in newer NLTK releases

# Sample text
text = "This is an example sentence for basic tokenization."  #@param {type:"string"}

# Tokenize the text
tokens = word_tokenize(text)

# Output the tokens
print(tokens)
```

```text
['This', 'is', 'an', 'example', 'sentence', 'for', 'basic', 'tokenization', '.']
```

```python
# @title Sub-word tokenizer used by the BERT model

# Import required library
from transformers import BertTokenizer

# Initialize the tokenizer with a pretrained model
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

# Sample text
text = "The striped bats were hanging on their feet and eating best batches of juicy leeches"  #@param {type:"string"}

# Tokenize the text
tokens = tokenizer.tokenize(text)

# Output the tokens
print(tokens)
```

```text
['the', 'striped', 'bats', 'were', 'hanging', 'on', 'their', 'feet', 'and', 'eating', 'best', 'batch', '##es', 'of', 'juicy', 'lee', '##ches']
```

As you can see above, some of the words are split into sub-words: “batch” + “##es” and “lee” + “##ches” (the “##” prefix marks a piece that continues the previous token). Which words get split depends on the tokenizer and its vocabulary; in BERT’s case, many verbs such as “hanging” and “eating” are still kept as single tokens rather than being split.
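The sketch below shows the greedy longest-match-first idea behind WordPiece-style sub-word tokenization, the scheme BERT’s tokenizer is based on. The tiny vocabulary is a hypothetical stand-in: the real `bert-base-uncased` vocabulary has roughly 30,000 entries (it contains “eating” as a whole word, which is why BERT did not split it), and the real tokenizer also lowercases and splits off punctuation before this step.

```python
# Hypothetical toy vocabulary; "##" marks a piece that continues a word
VOCAB = {"eat", "batch", "##es", "##ing", "lee", "##ches"}


def wordpiece_tokenize(word, vocab=VOCAB, unk_token="[UNK]"):
    """Split one word into the longest vocabulary pieces, scanning left to right."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest candidate first and shrink it until it is in the vocab
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk_token]  # no piece matched: treat the whole word as unknown
        pieces.append(piece)
        start = end
    return pieces


for w in ["eating", "batches", "leeches", "bats"]:
    print(w, "->", wordpiece_tokenize(w))
```

With this toy vocabulary “eating” becomes “eat” + “##ing”, and “bats” becomes `[UNK]` because none of its prefixes are in the vocabulary.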

How will our model know which sentiment to assign to a tweet?

Let’s step back for a moment and try to understand the tweet below:

I’m really excited towards tomorrow for our shop opening, see you guys at xxx!

How did we conclude that this tweet is positive again? Because it contains the word “excited”, and we know that “excited” is likely to appear in positive sentences but unlikely to appear in negative ones.

So how can a model, especially one that isn’t using deep learning, tell the sentiment of a tweet? By checking whether the sentence contains words that hint towards one of the sentiments.

“Excited” is unlikely to occur in a negative tweet

So how can we teach a machine that certain words are a strong hint that a tweet is positive, while other words are a strong hint of the opposite?

We could, of course, just feed in “excited”, “happy”, “sad”, etc. and tag each one as positive or negative. But if we don’t have a dictionary of all positive and negative words, how can we compile one?
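The “tag the words ourselves” approach can be sketched with a tiny hand-written lexicon. The word lists below are hypothetical, and the scoring rule (positive hits minus negative hits) is just one simple choice. The sketch also shows the weakness raised above: any word missing from the lexicon is simply ignored.

```python
# Hypothetical hand-made lexicon
POSITIVE_WORDS = {"excited", "happy", "awesome"}
NEGATIVE_WORDS = {"sad", "angry", "worried"}


def lexicon_sentiment(tokens):
    """Label tokens by comparing how many positive vs. negative lexicon words they contain."""
    score = sum(t in POSITIVE_WORDS for t in tokens) - sum(t in NEGATIVE_WORDS for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "unknown"  # no lexicon words, or a tie


print(lexicon_sentiment("im really excited towards tomorrow".split()))
print(lexicon_sentiment("the opening got delayed".split()))
```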

“‘Excited’ is unlikely to occur in a negative tweet”

So if we can gather lots of tweets that are already labeled as positive or negative, we can compile the positive words by going through all the positive tweets and checking which words show up in many of them.
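A minimal sketch of compiling word statistics from labeled tweets with `collections.Counter`. The four tweets are made-up toy data, assumed to be already preprocessed (lowercased, noise removed). Each word is counted at most once per tweet, so the numbers mean “how many tweets of this class contain the word”.

```python
from collections import Counter

# Made-up, already-preprocessed toy data: (tweet, label)
labeled_tweets = [
    ("excited for the new technology", "positive"),
    ("so excited to see you", "positive"),
    ("this technology keeps crashing", "negative"),
    ("sad and tired today", "negative"),
]

# Number of tweets in each class that contain each word
word_counts = {"positive": Counter(), "negative": Counter()}
for tweet, label in labeled_tweets:
    word_counts[label].update(set(tweet.split()))

print(word_counts["positive"]["excited"], word_counts["negative"]["excited"])
print(word_counts["positive"]["technology"], word_counts["negative"]["technology"])
```

Even in this toy data, “excited” shows up only on the positive side, which matches the quote above.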

“Words like ‘technology’ can occur in both positive and negative tweets, and shouldn’t affect the sentiment”

But don’t forget that some words are neutral. It depends on your dataset, but say we’re scraping tweets from tech reviewers: the word “technology” would appear in positive tweets, but of course also in negative ones.

So our first formula might be:

- If a word often appears in tweets with a certain sentiment, it probably hints that a tweet belongs to that sentiment.
- But if a word appears frequently in both sentiments, it is most likely a neutral word.
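The two rules above can be sketched as a small function. It assumes we already have, for each word, the number of positive and negative tweets containing it; the counts and the 0.8 cutoff below are hypothetical values picked for illustration, not tuned numbers.

```python
def word_hint(word, pos_counts, neg_counts, threshold=0.8):
    """Guess which sentiment a word hints at from how often it appears in each class.

    `threshold` is a hypothetical cutoff: a word needs at least this share of
    its occurrences in one class to count as a hint for that class.
    """
    pos = pos_counts.get(word, 0)
    neg = neg_counts.get(word, 0)
    total = pos + neg
    if total == 0:
        return "unseen"
    if pos / total >= threshold:
        return "positive"
    if neg / total >= threshold:
        return "negative"
    return "neutral"  # appears often in both classes


# Hypothetical counts of tweets containing each word
pos_counts = {"excited": 90, "technology": 48, "sad": 3}
neg_counts = {"excited": 5, "technology": 52, "sad": 70}

for w in ["excited", "technology", "sad", "blockchain"]:
    print(w, "->", word_hint(w, pos_counts, neg_counts))
```

Using the share of occurrences rather than raw counts keeps very common words like “technology” from being mistaken for strong hints just because they appear often.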

The concept above will be our baseline for understanding two feature extraction methods, Bag-of-Words and TF-IDF, which we’ll learn in our next session!

Isn’t NLP exciting?

Even a task as simple as sentiment analysis comes with lots of challenges:

- I’m not really interested thanks!

For a sentence like this, we have to make sure our model knows to use that “not” to negate whatever comes after it.

- Wow, that was so interesting that I fell asleep in the middle of the event

Sarcasm is a whole other level. How do we solve something like that? How can a model know which sentences are sarcastic and which are not?

Of course we won’t give you the answer right away 😛 Stay tuned and stay curious!