!nvidia-smiStable Diffusion - Basic

[ ! Attention ] It’s crucial to verify the license of the models, particularly if there’s an intention to use the obtained results for commercial purposes.

It’s essential to utilize these models responsibly and ethically. They should not be employed to create or disseminate illegal or harmful content. This includes, but is not limited to, content that is violent, hateful, sexually explicit, or infringes on someone’s privacy or rights.

As a user, the rights to the outputs generated using the model are retained. However, accountability for how these outputs are used also lies with the user. They should not be used in a manner that breaches the terms of the license or any applicable laws or regulations.

The model can be used commercially or as a service, and the weights can be redistributed. However, if this is done, the same use restrictions as those in the original license must be included. A copy of the CreativeML OpenRAIL-M license must also be provided to all users.

(Licence of v1.4 e v1.5 https://huggingface.co/spaces/CompVis/stable-diffusion-license)

With that out of the way, let’s try out various things we can do with Stable Diffusion. Let’s get started!

Note: - Some images when re-run will not be the same, even with the same seed. - Stable Diffusion is resource intensive in terms of need for GPU and large hard disk space, we may need to “disconnect and delete the runtime” and continue halfway through this notebook.

Installing the libraries

- Install the necessary libraries for stable diffusion

- xformers for memory optimization

!pip install diffusers==0.11.1

!pip install -q accelerate transformers ftfy bitsandbytes==0.35.0 gradio natsort safetensors xformersPipeline for image generation

- We can define with little effort a pipeline to use the Stable Diffusion model, through the StableDiffusionPipeline

import torch #PyTorch

from diffusers import StableDiffusionPipelinepipe = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4", torch_dtype=torch.float16)pipe = pipe.to('cuda') #We'll always use GPU, make sure your change your runtime to use GPU is you're on Collabpipe.enable_attention_slicing()

pipe.enable_xformers_memory_efficient_attention()Sometime during image generation, the image may come out all black, to avoid this we can disable safety checker.

#avoid all black images, disabling it is easy, you can do this:

pipe.safety_checker = lambda images, clip_input: (images, False)Creating the prompt

prompt = 'orange cat'Generating the image

img = pipe(prompt).images[0]Display the image

img

Saving the result

img.save('result.png')Let’s continue our experimentation.

prompt = 'photograph of orange cat, realistic, full hd'

img = pipe(prompt).images[0]

img

prompt = 'a photograph of orange cat'

img = pipe(prompt).images[0]

img

Generating multiple images

from PIL import Image

def grid_img(imgs, rows=1, cols=3, scale=1):

assert len(imgs) == rows * cols

w, h = imgs[0].size

w, h = int(w * scale), int(h * scale)

grid = Image.new('RGB', size = (cols * w, rows * h))

grid_w, grid_h = grid.size

for i, img in enumerate(imgs):

img = img.resize((w, h), Image.ANTIALIAS)

grid.paste(img, box=(i % cols * w, i // cols * h))

return gridnum_imgs = 3

prompt = 'photograph of orange cat'

imgs = pipe(prompt, num_images_per_prompt=num_imgs).images

grid = grid_img(imgs, rows=1, cols=3, scale=0.75)

grid

Parameters

There are some parameters we can set

Seed

We can set seed if we want to generate similar images.

seed = 2000

generator = torch.Generator('cuda').manual_seed(seed)

img = pipe(prompt, generator=generator).images[0]

img

prompt = "photograph of orange cat"

seed = 2000

generator = torch.Generator("cuda").manual_seed(seed)

imgs = pipe(prompt, num_images_per_prompt=num_imgs, generator=generator).images

grid = grid_img(imgs, rows=1, cols=3, scale=0.75)

grid

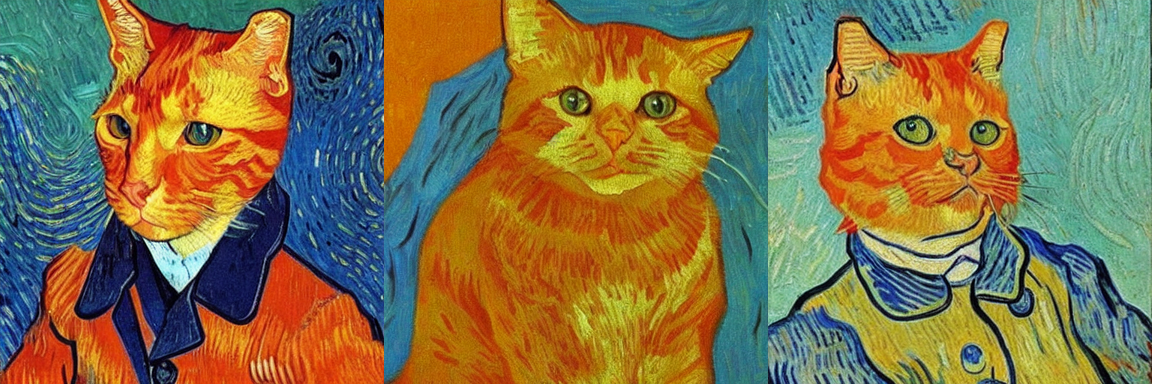

prompt = "van gogh painting of an orange cat"

generator = torch.Generator("cuda").manual_seed(seed)

imgs = pipe(prompt, num_images_per_prompt=num_imgs, generator=generator).images

grid = grid_img(imgs, rows=1, cols=3, scale=0.75)

grid

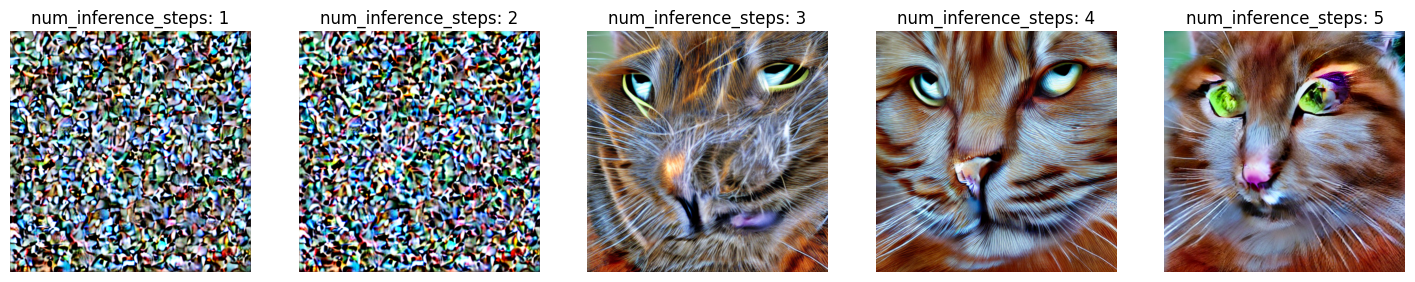

Inference steps

Inference steps refer to the number of denoising steps to reach the final image. The default number of inference steps of 50. If you want faster results you can use a smaller number. If you want potentially higher quality results, you can use larger numbers.

Let’s try out running the pipeline with less denoising steps.

prompt = "photograph of orange cat, realistic, full hd"

generator = torch.Generator("cuda").manual_seed(seed)

img = pipe(prompt, num_inference_steps=3, generator=generator).images[0]

img

import matplotlib.pyplot as plt

plt.figure(figsize=(18,8))

for i in range(1, 6):

n_steps = i * 1

#print(n_steps)

generator = torch.Generator('cuda').manual_seed(seed)

img = pipe(prompt, num_inference_steps=n_steps, generator=generator).images[0]

plt.subplot(1, 5, i)

plt.title('num_inference_steps: {}'.format(n_steps))

plt.imshow(img)

plt.axis('off')

plt.show()

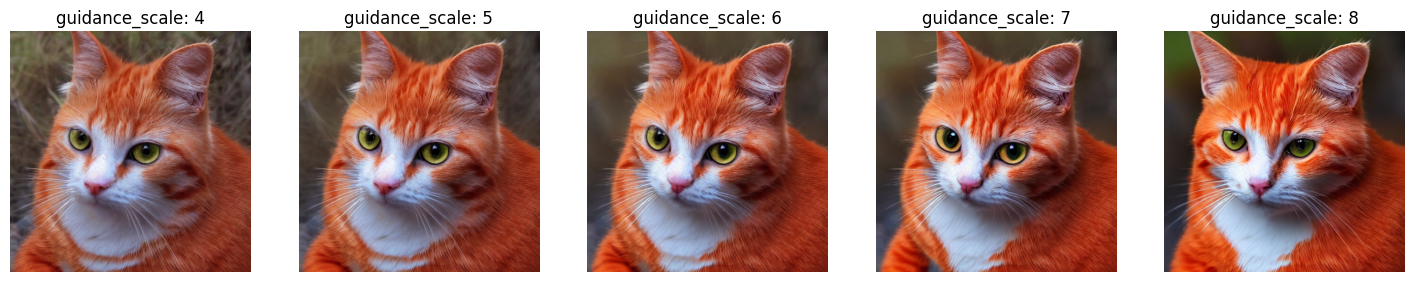

Guidance Scale (CFG / Strength)

CFG stands for Classifier-Free Guidance, so CFG scale can be referred to as Classifier-Free Guidance scale.

So, before 2022, there was a method called classifier guidance. It’s a method that can balance between mode coverage and sample quality in diffusion models after training, similar to low-temperature sampling or truncation in other generative models. Essentially, classifier guidance is a mix between the score estimate from the diffusion model and the gradient from the image classifier. However, if we want to use it, we have to train an image classifier that’s different from the diffusion model.

Then, a question arises, can we have guidance without a classifier?

In 2022, Jonathan Ho and Tim Salimans from Google Brain demonstrated that we can use a pure generative model without a classifier. The title of their paper is “Classifier-Free Guidance”. They train both conditional and unconditional diffusion models together, then they combine the score estimates from both to achieve a trade-off between sample quality and diversity, similar to using classifier guidance.

It’s this CFG that Stable Diffusion uses to balance between the prompt and the Stable Diffusion model. If the CFG Scale is low, the image won’t follow the prompt. But if the CFG Scale is high, the result will be a random colorful image that doesn’t resemble the prompt at all.

The most suitable choice for CFG Scale is between 6.0 - 15.0. Lower values are good for photorealistic images, while higher values are suitable for a more artistic style.

prompt = "a man sit in front of the door"

generator = torch.Generator("cuda").manual_seed(seed)

img = pipe(prompt, guidance_scale=7, generator=generator).images[0]

img

plt.figure(figsize=(18,8))

for i in range(1, 6):

n_guidance = i + 3

generator = torch.Generator("cuda").manual_seed(seed)

img = pipe(prompt, guidance_scale=n_guidance, generator=generator).images[0]

plt.subplot(1,5,i)

plt.title('guidance_scale: {}'.format(n_guidance))

plt.imshow(img)

plt.axis('off')

plt.show()

Image size (dimensions)

The generated images are 512 x 512 pixels

Recommendations in case you want other dimensions:

- make sure the height and width are multiples of 8

- less than 512 will result in lower quality images

- exceeding 512 in both directions (width and height) will repeat areas of the image (“global coherence” is lost)

Landscape mode

seed = 777

prompt = "photograph of orange cat"

generator = torch.Generator("cuda").manual_seed(seed)

h, w = 512, 512

img = pipe(prompt, height=h, width=w, generator=generator).images[0]

img

Portrait mode

generator = torch.Generator("cuda").manual_seed(seed)

h, w = 768, 512

img = pipe(prompt, height=h, width=w, generator=generator).images[0]

img

Negative prompt

We can use negative prompt to tell Stable Diffusion things we don’t want in our image.

num_images = 3

prompt = 'photograph of old car'

neg_prompt = 'black white'

imgs = pipe(prompt, negative_prompt = neg_prompt, num_images_per_prompt=num_images).images

grid = grid_img(imgs, rows = 1, cols = 3, scale=0.75)

grid

Other models

SD v1.5

sd15 = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16)

sd15 = sd15.to('cuda')

sd15.enable_attention_slicing()

sd15.enable_xformers_memory_efficient_attention()num_imgs = 3

prompt = "photograph of an old car"

neg_prompt = 'black white'

imgs = sd15(prompt, negative_prompt=neg_prompt, num_images_per_prompt=num_imgs).images

grid = grid_img(imgs, rows=1, cols=3, scale=0.75)

grid

prompt = "photo of a futuristic city on another planet, realistic, full hd"

neg_prompt = 'buildings'

imgs = sd15(prompt, negative_prompt = neg_prompt, num_images_per_prompt=num_imgs).images

grid = grid_img(imgs, rows=1, cols=3, scale=0.75)

gridSD v2.x

sd2 = StableDiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-2-1", torch_dtype=torch.float16)

sd2 = sd2.to("cuda")

sd2.enable_attention_slicing()

sd2.enable_xformers_memory_efficient_attention()prompt = "photograph of an old car"

neg_prompt = 'black white'

imgs = sd2(prompt, negative_prompt=neg_prompt, num_images_per_prompt=num_imgs).images

grid = grid_img(imgs, rows=1, cols=3, scale=0.75)

grid

Fine-tuned models with specific styles

Mo-di-diffusion (Modern Disney style)

https://huggingface.co/nitrosocke/mo-di-diffusion

modi = StableDiffusionPipeline.from_pretrained("nitrosocke/mo-di-diffusion", torch_dtype=torch.float16)

modi = modi.to("cuda")

modi.enable_attention_slicing()

modi.enable_xformers_memory_efficient_attention()prompt = "a photograph of an astronaut riding a horse, modern disney style"

seed = 777

generator = torch.Generator("cuda").manual_seed(seed)

imgs = modi(prompt, generator=generator, num_images_per_prompt=num_imgs).images

grid = grid_img(imgs, rows=1, cols=3, scale=0.75)

gridprompt = "orange cat, modern disney style"

generator = torch.Generator("cuda").manual_seed(seed)

imgs = modi(prompt, generator=generator, num_images_per_prompt=3).images

grid = grid_img(imgs, rows=1, cols=3, scale=0.5)

gridprompt = ["albert einstein, modern disney style",

"modern disney style old chevette driving in the desert, golden hour",

"modern disney style delorean"]

seed = 777

print("Seed: ".format(str(seed)))

generator = torch.Generator("cuda").manual_seed(seed)

imgs = modi(prompt, generator=generator).images

grid = grid_img(imgs, rows=1, cols=3, scale=0.75)

gridOther models

Classic Disney Style - https://huggingface.co/nitrosocke/classic-anim-diffusion

High resolution 3D animation - https://huggingface.co/nitrosocke/redshift-diffusion

Futuristic images - https://huggingface.co/nitrosocke/Future-Diffusion

Other animation styles:

https://huggingface.co/nitrosocke/Ghibli-Diffusion

https://huggingface.co/nitrosocke/spider-verse-diffusion

more models https://huggingface.co/models?other=stable-diffusion-diffusers

Changing the scheduler (sampler)

We can also change the scheduler for our Stable Diffusion.

- Available schedulers: https://huggingface.co/docs/diffusers/using-diffusers/schedulers#schedulers-summary

Default is PNDMScheduler.

sd15 = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16)

sd15 = sd15.to("cuda")

sd15.enable_attention_slicing()

sd15.enable_xformers_memory_efficient_attention()sd15.schedulerseed = 777

prompt = "a photo of a orange cat wearing sunglasses, on the beach, ocean in the background"

generator = torch.Generator('cuda').manual_seed(seed)

img = sd15(prompt, generator=generator).images[0]

imgsd15.scheduler.compatiblessd15.scheduler.configfrom diffusers import DDIMScheduler

sd15.scheduler = DDIMScheduler.from_config(sd15.scheduler.config)generator = torch.Generator(device = 'cuda').manual_seed(seed)

img = sd15(prompt, generator=generator).images[0]

imgfrom diffusers import LMSDiscreteScheduler

sd15.scheduler = LMSDiscreteScheduler.from_config(sd15.scheduler.config)

generator = torch.Generator(device = 'cuda').manual_seed(seed)

img = sd15(prompt, num_inference_steps = 60, generator=generator).images[0]

imgfrom diffusers import EulerAncestralDiscreteScheduler

sd15.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)

generator = torch.Generator(device="cuda").manual_seed(seed)

img = sd15(prompt, generator=generator, num_inference_steps=50).images[0]

imgfrom diffusers import EulerDiscreteScheduler

sd15.scheduler = EulerDiscreteScheduler.from_config(pipe.scheduler.config)

generator = torch.Generator(device="cuda").manual_seed(seed)

img = sd15(prompt, generator=generator, num_inference_steps=50).images[0]

img