import os

import langchain

langchain.debug = True  # log every chain, LLM, and tool call in detail

from dotenv import load_dotenv, find_dotenv

_ = load_dotenv(find_dotenv()) # read local .env file

Agent

What is an Agent?

Large Language Models (LLMs) are not just stores of knowledge; they can also act as reasoning engines. They combine their background knowledge with new information provided at run time to help users make decisions, reason through content, or identify next steps.

LangChain’s agents connect an LLM to tools, databases, APIs, and functions. Agents are a new and still-evolving part of LangChain, and they open up many possibilities.

In short, LangChain agents let you use a language model as a reasoning engine that interacts with various data sources and functions.

Behind the Agent

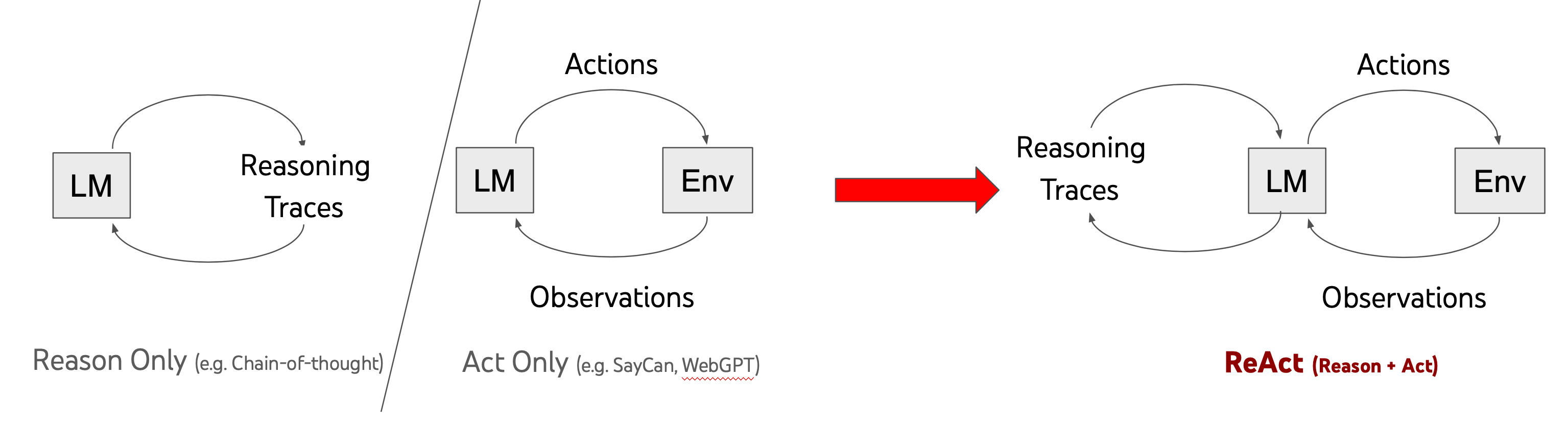

LangChain agents work by repeatedly looping through thought, action, and observation steps until they can answer.

The logic behind this loop comes from ReAct (Reason + Act) prompting, which enables the language model to take actions and reason about their results.

ReAct (Reason+Act) Prompting

ReAct (Reason + Act) is a framework for reasoning and acting in language models.

ReAct creates a synergy between reasoning and acting: reasoning traces help the model induce, track, and update action plans and handle exceptions, while actions let it interface with external sources to gather additional information.
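To make this interplay concrete, here is a minimal sketch of how a ReAct-style agent feeds its own history back into the prompt: each past thought, action, and observation is rendered as text (a "scratchpad") that ends with an open "Thought:" for the model to continue. The Step type and format_scratchpad function are hypothetical illustrations, not LangChain APIs; the Action / Action Input layout follows the text format the Python agent uses later in this notebook, while the chat agent wraps its action in a JSON blob instead.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    """One ReAct iteration: what the model thought, which tool it called, and what it saw."""
    thought: str
    action: str
    action_input: str
    observation: str


def format_scratchpad(steps: List[Step]) -> str:
    """Render past steps as Thought/Action/Observation text, ending with a fresh 'Thought:'."""
    lines = []
    for step in steps:
        lines.append(f"Thought: {step.thought}")
        lines.append(f"Action: {step.action}")
        lines.append(f"Action Input: {step.action_input}")
        lines.append(f"Observation: {step.observation}")
    # The trailing 'Thought:' invites the model to reason about the latest observation.
    lines.append("Thought:")
    return "\n".join(lines)


history = [Step("I should multiply 300 by 0.25.", "Calculator", "300 * 0.25", "75.0")]
print(format_scratchpad(history))
```

On each iteration the scratchpad grows by one step, so the model always sees everything it has tried so far before deciding what to do next.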

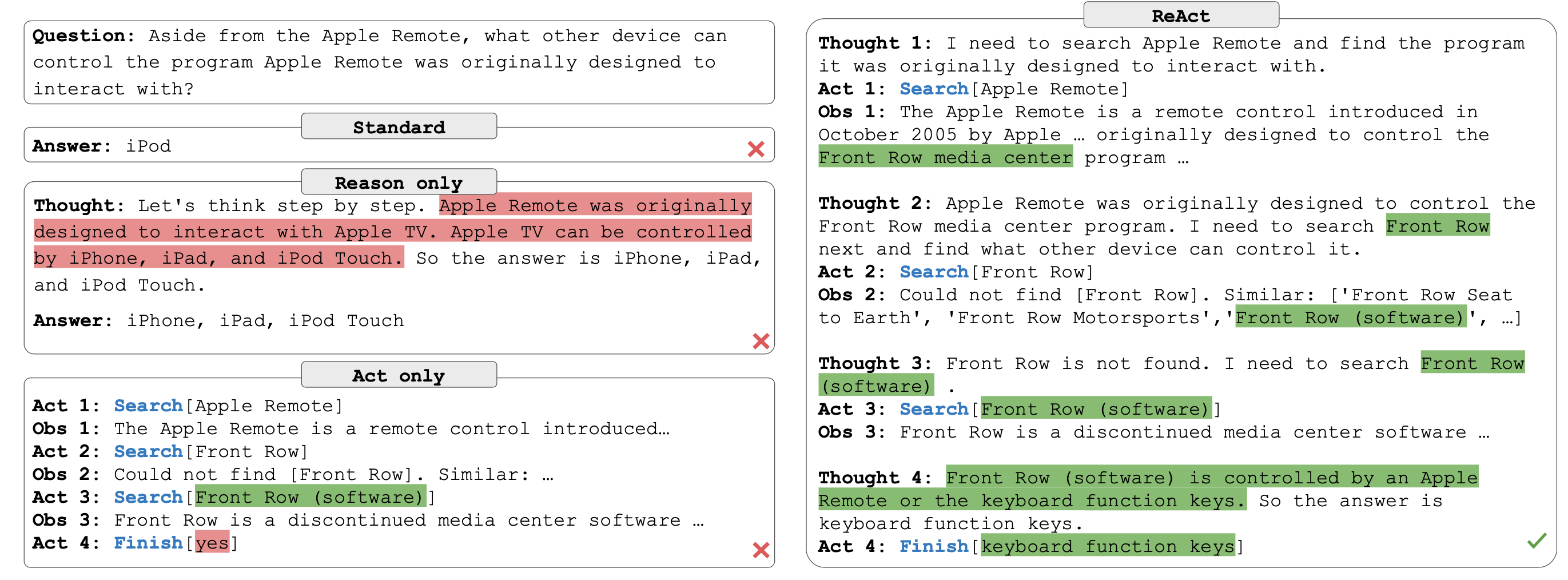

ReAct also improves the human interpretability and trustworthiness of the models.

In question answering and fact verification tasks, ReAct overcomes the hallucination and error-propagation issues common in chain-of-thought (reasoning-only) prompting by interacting with an external source, such as a simple Wikipedia API.

In interactive decision-making benchmarks (ALFWorld and WebShop), ReAct outperforms imitation and reinforcement learning methods, with significantly higher success rates.

Comparison: Reason-only and Act-only prompting vs. ReAct prompting

Main Concepts for Using LangChain Agents

Load the Agent

Start by loading the desired agent from the list of supported agents. Make sure you use the correct string (or AgentType value) to reference the supported agent class.
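In LangChain the agent class is selected through the AgentType enum (as in the initialize_agent calls later in this notebook), whose members carry string values. The stdlib sketch below shows why looking an agent up through an enum, rather than a free-form string, catches typos early. AgentKind and resolve_agent are hypothetical names, and the two string values are assumed to mirror LangChain's AgentType values for the zero-shot ReAct agents; check them against your installed version.

```python
from enum import Enum


class AgentKind(str, Enum):
    """Hypothetical stand-in for LangChain's AgentType: each member has a string value."""
    ZERO_SHOT_REACT_DESCRIPTION = "zero-shot-react-description"
    CHAT_ZERO_SHOT_REACT_DESCRIPTION = "chat-zero-shot-react-description"


def resolve_agent(name: str) -> AgentKind:
    """Look up an agent type by its string value, failing loudly on unknown names."""
    try:
        return AgentKind(name)
    except ValueError:
        valid = ", ".join(kind.value for kind in AgentKind)
        raise ValueError(f"Unknown agent type {name!r}; expected one of: {valid}") from None


kind = resolve_agent("chat-zero-shot-react-description")
print(kind.name)
```

Because the enum mixes in str, a member compares equal to its string value, so code that expects a plain string still works.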

Choose a Tool

Next, select the tools the task needs. Each tool serves a specific function and is designed to accept a string as input and return a string as output.

Interact with the Agent

Once the agent and tools are set up, you interact with the agent by giving it relevant input. The agent, powered by the Large Language Model (LLM), determines which actions to take and in what order.

Observe the Output

Finally, depending on the task, the agent either calls a tool and uses its output to continue, or returns a final answer to the user based on the input it was given.

Main Types of Agents

LangChain offers two main types of agents: Action Agents and Plan-and-Execute Agents.

Action Agents

Action Agents take one action at a time based on user input. They decide which tool to use and what input to give to that tool. The tool is then called with the input, and an observation is recorded.

This process is repeated until the agent decides it no longer needs to use a tool, and then it responds directly to the user.

At a high level, an action agent:

1. Receives user input
2. Decides which tool, if any, to use and the tool input
3. Calls the tool and records the output (also known as an “observation”)
4. Decides the next step using the history of tools, tool inputs, and observations
5. Repeats steps 3-4 until it determines it can respond directly to the user

Action agents are wrapped in agent executors: chains responsible for calling the agent, getting back an action and action input, calling the tool that the action references with the generated input, getting the tool's output, and passing all that information back to the agent to get the next action it should take.

Key components of Action Agents include:

Agent: Contains the application logic.

Tools: Actions an agent can take.

Toolkits: Groups of tools designed for a specific use case.

Agent Executor: Wraps an agent and a list of tools, and runs the agent iteratively until a stopping criterion is met.
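The executor loop described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not LangChain's AgentExecutor: the AgentAction and AgentFinish dataclasses only borrow LangChain's concept names, scripted_agent is a hypothetical stand-in for the LLM, and max_iterations plays the role of the stopping criterion.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple, Union


@dataclass
class AgentAction:
    """The agent wants to call `tool` with `tool_input`."""
    tool: str
    tool_input: str


@dataclass
class AgentFinish:
    """The agent is done and returns `output` to the user."""
    output: str


# Hypothetical agent signature: (user input, [(action, observation), ...]) -> next decision.
Agent = Callable[[str, List[Tuple[AgentAction, str]]], Union[AgentAction, AgentFinish]]


def run_executor(agent: Agent, tools: Dict[str, Callable[[str], str]],
                 user_input: str, max_iterations: int = 5) -> str:
    """Call the agent, run the tool it picks, record the observation, repeat."""
    history: List[Tuple[AgentAction, str]] = []
    for _ in range(max_iterations):
        decision = agent(user_input, history)
        if isinstance(decision, AgentFinish):
            return decision.output  # the agent chose to respond directly
        tool = tools.get(decision.tool)
        observation = (tool(decision.tool_input) if tool
                       else f"{decision.tool} is not a valid tool.")
        history.append((decision, observation))
    # Stopping criterion: give up after max_iterations tool calls.
    return "Agent stopped: iteration limit reached."


def multiply(expression: str) -> str:
    """Toy calculator that only understands 'a * b'."""
    a, b = (float(part) for part in expression.split("*"))
    return str(a * b)


def scripted_agent(user_input: str, history: List[Tuple[AgentAction, str]]):
    """Stand-in for the LLM: call the calculator once, then answer with its result."""
    if not history:
        return AgentAction("Calculator", "300 * 0.25")
    _, last_observation = history[-1]
    return AgentFinish(f"25% of 300 is {last_observation}.")


print(run_executor(scripted_agent, {"Calculator": multiply}, "What is 25% of 300?"))
# 25% of 300 is 75.0.
```

The agent itself never calls tools; it only returns decisions, and the executor owns the loop, the tool calls, and the iteration limit.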

Plan and Execute Agents

Plan-and-Execute Agents first decide a plan of actions to take, and then execute those actions one at a time. The planner lists out the steps to take, and the executor goes through the list of steps, executing them.

The most typical implementation is to have the planner be a language model, and the executor be an action agent.

The power of these agents comes from the large language model itself: with careful prompt design, the language model is guided through the workflow and decides what to do next.

At a high level, a plan-and-execute agent:

1. Receives user input
2. Plans the full sequence of steps to take
3. Executes the steps in order, passing the outputs of past steps as inputs to future steps
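The plan-then-execute flow can be sketched as follows. This is a toy under stated assumptions: plan and execute_step are hypothetical hard-coded stand-ins for what would, in practice, be an LLM planner and an action-agent executor. What it does show faithfully is the structure: the plan is fixed up front, and each step can read the outputs of earlier steps.

```python
from typing import Callable, List


def plan(user_input: str) -> List[str]:
    """Hypothetical planner: in practice an LLM would produce this list of steps."""
    return ["take 25% of 300", "add 10 to the previous result"]


def execute_step(step: str, previous: List[str]) -> str:
    """Hypothetical executor for the two toy steps above (in practice, an action agent)."""
    if step == "take 25% of 300":
        return str(300 * 25 / 100)
    if step == "add 10 to the previous result":
        return str(float(previous[-1]) + 10)
    raise ValueError(f"Executor does not know how to handle: {step}")


def plan_and_execute(user_input: str,
                     planner: Callable[[str], List[str]] = plan,
                     executor: Callable[[str, List[str]], str] = execute_step) -> str:
    """Plan once, then run each step in order, passing earlier outputs forward."""
    results: List[str] = []
    for step in planner(user_input):
        results.append(executor(step, results))
    return results[-1] if results else ""


print(plan_and_execute("What is 25% of 300, plus 10?"))  # 85.0
```

Compared with the action-agent loop, the model is consulted once for the whole plan instead of after every observation, which saves calls but cannot adapt the plan mid-way unless a replanning step is added.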


An example of such an action agent is the LLM chat agent, which consists of:

PromptTemplate: Instructs the language model on what to do.

ChatModel: The language model that powers the agent.

Stop sequence: Instructs the language model to stop generating as soon as this string is found.

OutputParser: Parses the language model output into an AgentAction or AgentFinish object.
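The stop sequence and output parser can be illustrated against the JSON-blob format that appears in the debug log of the example below (an "action" and "action_input" key inside a fenced block, "Final Answer:" for the end, generation stopped at "Observation:"). This is a simplified stdlib sketch, not LangChain's own parser; apply_stop, parse_output, and the local AgentAction/AgentFinish dataclasses are hypothetical, and the real parser handles more edge cases.

```python
import json
import re
from dataclasses import dataclass
from typing import Union

FENCE = "`" * 3  # the triple-backtick fence the prompt asks the model to wrap its JSON in


@dataclass
class AgentAction:
    tool: str
    tool_input: str


@dataclass
class AgentFinish:
    output: str


def apply_stop(text: str, stop: str = "Observation:") -> str:
    """Emulate a stop sequence: discard everything from the first `stop` onward."""
    return text.split(stop, 1)[0]


def parse_output(text: str) -> Union[AgentAction, AgentFinish]:
    """Map raw model text to a final answer or to the tool call in its JSON blob."""
    if "Final Answer:" in text:
        return AgentFinish(text.split("Final Answer:", 1)[1].strip())
    match = re.search(FENCE + r"(?:json)?\s*(\{.*?\})\s*" + FENCE, text, re.DOTALL)
    if match is None:
        raise ValueError(f"Could not parse model output: {text!r}")
    blob = json.loads(match.group(1))
    return AgentAction(blob["action"], blob["action_input"])


raw = (
    "Thought: I can use the calculator tool for this.\nAction:\n"
    + FENCE + "\n"
    + '{\n  "action": "Calculator",\n  "action_input": "300 * 0.25"\n}\n'
    + FENCE + "\nObservation: 75.0"
)
print(parse_output(apply_stop(raw)))
# AgentAction(tool='Calculator', tool_input='300 * 0.25')
```

The stop sequence matters because, without it, the model would happily invent its own "Observation:" instead of waiting for the real tool result.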

LangChain Tools

A LangChain Tool is a function designed to perform a specific task.

Examples of tools range from Google Search and database lookups to a Python REPL or even other chains. The standard interface for a tool is a function that accepts a string as input and returns a string as output.
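The string-in, string-out interface can be sketched with a small calculator tool. The Tool dataclass and calculator function are hypothetical illustrations, not LangChain classes. LangChain's llm-math tool asks an LLM to write an expression and evaluates it with numexpr; this sketch swaps that for a restricted evaluator built on Python's ast module, so only basic arithmetic is accepted.

```python
import ast
import operator
from dataclasses import dataclass
from typing import Callable

# Arithmetic operators the toy calculator is allowed to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def _eval(node: ast.AST) -> float:
    """Recursively evaluate numbers and whitelisted operators; reject anything else."""
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.operand))
    raise ValueError("unsupported expression")


def calculator(expression: str) -> str:
    """String in, string out: evaluate a basic arithmetic expression."""
    try:
        return str(_eval(ast.parse(expression, mode="eval").body))
    except (SyntaxError, ValueError, ZeroDivisionError) as exc:
        # Return the error as text so the agent can read it as an observation.
        return f"Error: {exc}"


@dataclass
class Tool:
    """Hypothetical minimal tool: a name, a description the LLM reads, and a str -> str function."""
    name: str
    description: str
    func: Callable[[str], str]

    def run(self, tool_input: str) -> str:
        return self.func(tool_input)


calc_tool = Tool("Calculator", "Useful for when you need to answer questions about math.", calculator)
print(calc_tool.run("300 * 0.25"))  # 75.0
```

Returning errors as strings, rather than raising, keeps the interface uniform: whatever happens, the agent receives text it can reason about.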


Built-in Tools

LangChain comes with a set of built-in tools that can be used to perform various tasks.

Depending on the type of question asked, the agent decides which of its tools it needs.

The list of all available tools can be found at:

https://python.langchain.com/docs/integrations/tools/

Example use of built-in tools: LLM-Math & Wikipedia

Agents can also use knowledge sources such as Wikipedia to answer questions that require specific or historical knowledge rather than recent data, such as details about a particular person.

from langchain.chat_models import ChatOpenAI

OpenAIModel = "gpt-4"

llm = ChatOpenAI(model=OpenAIModel, temperature=0.1)

!pip install -U wikipedia


from langchain.agents import load_tools, initialize_agent

from langchain.agents import AgentType

# Load the built-in llm-math (calculator) tool; it is the only tool loaded here
tools = load_tools(["llm-math"], llm=llm)

agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.CHAT_ZERO_SHOT_REACT_DESCRIPTION,
    handle_parsing_errors=True,  # send unparseable LLM output back to the model instead of raising
    verbose=True)

agent("What is the 25% of 300?")

[chain/start] [1:chain:AgentExecutor] Entering Chain run with input:

{

"input": "What is the 25% of 300?"

}

[chain/start] [1:chain:AgentExecutor > 2:chain:LLMChain] Entering Chain run with input:

{

"input": "What is the 25% of 300?",

"agent_scratchpad": "",

"stop": [

"Observation:"

]

}

[llm/start] [1:chain:AgentExecutor > 2:chain:LLMChain > 3:llm:ChatOpenAI] Entering LLM run with input:

{

"prompts": [

"System: Answer the following questions as best you can. You have access to the following tools:\n\nCalculator: Useful for when you need to answer questions about math.\n\nThe way you use the tools is by specifying a json blob.\nSpecifically, this json should have a `action` key (with the name of the tool to use) and a `action_input` key (with the input to the tool going here).\n\nThe only values that should be in the \"action\" field are: Calculator\n\nThe $JSON_BLOB should only contain a SINGLE action, do NOT return a list of multiple actions. Here is an example of a valid $JSON_BLOB:\n\n```\n{\n \"action\": $TOOL_NAME,\n \"action_input\": $INPUT\n}\n```\n\nALWAYS use the following format:\n\nQuestion: the input question you must answer\nThought: you should always think about what to do\nAction:\n```\n$JSON_BLOB\n```\nObservation: the result of the action\n... (this Thought/Action/Observation can repeat N times)\nThought: I now know the final answer\nFinal Answer: the final answer to the original input question\n\nBegin! Reminder to always use the exact characters `Final Answer` when responding.\nHuman: What is the 25% of 300?"

]

}

[llm/end] [1:chain:AgentExecutor > 2:chain:LLMChain > 3:llm:ChatOpenAI] [8.36s] Exiting LLM run with output:

{

"generations": [

[

{

"text": "Thought: To find 25% of 300, I need to multiply 300 by 0.25. I can use the calculator tool for this.\nAction:\n```\n{\n \"action\": \"Calculator\",\n \"action_input\": \"300 * 0.25\"\n}\n```",

"generation_info": {

"finish_reason": "stop"

},

"message": {

"lc": 1,

"type": "constructor",

"id": [

"langchain",

"schema",

"messages",

"AIMessage"

],

"kwargs": {

"content": "Thought: To find 25% of 300, I need to multiply 300 by 0.25. I can use the calculator tool for this.\nAction:\n```\n{\n \"action\": \"Calculator\",\n \"action_input\": \"300 * 0.25\"\n}\n```",

"additional_kwargs": {}

}

}

}

]

],

"llm_output": {

"token_usage": {

"prompt_tokens": 273,

"completion_tokens": 59,

"total_tokens": 332

},

"model_name": "gpt-4"

},

"run": null

}

[chain/end] [1:chain:AgentExecutor > 2:chain:LLMChain] [8.36s] Exiting Chain run with output:

{

"text": "Thought: To find 25% of 300, I need to multiply 300 by 0.25. I can use the calculator tool for this.\nAction:\n```\n{\n \"action\": \"Calculator\",\n \"action_input\": \"300 * 0.25\"\n}\n```"

}

[tool/start] [1:chain:AgentExecutor > 4:tool:Calculator] Entering Tool run with input:

"300 * 0.25"

[chain/start] [1:chain:AgentExecutor > 4:tool:Calculator > 5:chain:LLMMathChain] Entering Chain run with input:

{

"question": "300 * 0.25"

}

[chain/start] [1:chain:AgentExecutor > 4:tool:Calculator > 5:chain:LLMMathChain > 6:chain:LLMChain] Entering Chain run with input:

{

"question": "300 * 0.25",

"stop": [

"```output"

]

}

[llm/start] [1:chain:AgentExecutor > 4:tool:Calculator > 5:chain:LLMMathChain > 6:chain:LLMChain > 7:llm:ChatOpenAI] Entering LLM run with input:

{

"prompts": [

"Human: Translate a math problem into a expression that can be executed using Python's numexpr library. Use the output of running this code to answer the question.\n\nQuestion: ${Question with math problem.}\n```text\n${single line mathematical expression that solves the problem}\n```\n...numexpr.evaluate(text)...\n```output\n${Output of running the code}\n```\nAnswer: ${Answer}\n\nBegin.\n\nQuestion: What is 37593 * 67?\n```text\n37593 * 67\n```\n...numexpr.evaluate(\"37593 * 67\")...\n```output\n2518731\n```\nAnswer: 2518731\n\nQuestion: 37593^(1/5)\n```text\n37593**(1/5)\n```\n...numexpr.evaluate(\"37593**(1/5)\")...\n```output\n8.222831614237718\n```\nAnswer: 8.222831614237718\n\nQuestion: 300 * 0.25"

]

}

[llm/end] [1:chain:AgentExecutor > 4:tool:Calculator > 5:chain:LLMMathChain > 6:chain:LLMChain > 7:llm:ChatOpenAI] [3.35s] Exiting LLM run with output:

{

"generations": [

[

{

"text": "```text\n300 * 0.25\n```\n...numexpr.evaluate(\"300 * 0.25\")...\n",

"generation_info": {

"finish_reason": "stop"

},

"message": {

"lc": 1,

"type": "constructor",

"id": [

"langchain",

"schema",

"messages",

"AIMessage"

],

"kwargs": {

"content": "```text\n300 * 0.25\n```\n...numexpr.evaluate(\"300 * 0.25\")...\n",

"additional_kwargs": {}

}

}

}

]

],

"llm_output": {

"token_usage": {

"prompt_tokens": 206,

"completion_tokens": 25,

"total_tokens": 231

},

"model_name": "gpt-4"

},

"run": null

}

[chain/end] [1:chain:AgentExecutor > 4:tool:Calculator > 5:chain:LLMMathChain > 6:chain:LLMChain] [3.35s] Exiting Chain run with output:

{

"text": "```text\n300 * 0.25\n```\n...numexpr.evaluate(\"300 * 0.25\")...\n"

}

[chain/end] [1:chain:AgentExecutor > 4:tool:Calculator > 5:chain:LLMMathChain] [3.35s] Exiting Chain run with output:

{

"answer": "Answer: 75.0"

}

[tool/end] [1:chain:AgentExecutor > 4:tool:Calculator] [3.35s] Exiting Tool run with output:

"Answer: 75.0"

[chain/start] [1:chain:AgentExecutor > 8:chain:LLMChain] Entering Chain run with input:

{

"input": "What is the 25% of 300?",

"agent_scratchpad": "This was your previous work (but I haven't seen any of it! I only see what you return as final answer):\nThought: To find 25% of 300, I need to multiply 300 by 0.25. I can use the calculator tool for this.\nAction:\n```\n{\n \"action\": \"Calculator\",\n \"action_input\": \"300 * 0.25\"\n}\n```\nObservation: Answer: 75.0\nThought:",

"stop": [

"Observation:"

]

}

[llm/start] [1:chain:AgentExecutor > 8:chain:LLMChain > 9:llm:ChatOpenAI] Entering LLM run with input:

{

"prompts": [

"System: Answer the following questions as best you can. You have access to the following tools:\n\nCalculator: Useful for when you need to answer questions about math.\n\nThe way you use the tools is by specifying a json blob.\nSpecifically, this json should have a `action` key (with the name of the tool to use) and a `action_input` key (with the input to the tool going here).\n\nThe only values that should be in the \"action\" field are: Calculator\n\nThe $JSON_BLOB should only contain a SINGLE action, do NOT return a list of multiple actions. Here is an example of a valid $JSON_BLOB:\n\n```\n{\n \"action\": $TOOL_NAME,\n \"action_input\": $INPUT\n}\n```\n\nALWAYS use the following format:\n\nQuestion: the input question you must answer\nThought: you should always think about what to do\nAction:\n```\n$JSON_BLOB\n```\nObservation: the result of the action\n... (this Thought/Action/Observation can repeat N times)\nThought: I now know the final answer\nFinal Answer: the final answer to the original input question\n\nBegin! Reminder to always use the exact characters `Final Answer` when responding.\nHuman: What is the 25% of 300?\n\nThis was your previous work (but I haven't seen any of it! I only see what you return as final answer):\nThought: To find 25% of 300, I need to multiply 300 by 0.25. I can use the calculator tool for this.\nAction:\n```\n{\n \"action\": \"Calculator\",\n \"action_input\": \"300 * 0.25\"\n}\n```\nObservation: Answer: 75.0\nThought:"

]

}

[llm/end] [1:chain:AgentExecutor > 8:chain:LLMChain > 9:llm:ChatOpenAI] [3.19s] Exiting LLM run with output:

{

"generations": [

[

{

"text": "The calculator tool has correctly calculated 25% of 300 as 75.\nFinal Answer: 25% of 300 is 75.",

"generation_info": {

"finish_reason": "stop"

},

"message": {

"lc": 1,

"type": "constructor",

"id": [

"langchain",

"schema",

"messages",

"AIMessage"

],

"kwargs": {

"content": "The calculator tool has correctly calculated 25% of 300 as 75.\nFinal Answer: 25% of 300 is 75.",

"additional_kwargs": {}

}

}

}

]

],

"llm_output": {

"token_usage": {

"prompt_tokens": 370,

"completion_tokens": 29,

"total_tokens": 399

},

"model_name": "gpt-4"

},

"run": null

}

[chain/end] [1:chain:AgentExecutor > 8:chain:LLMChain] [3.19s] Exiting Chain run with output:

{

"text": "The calculator tool has correctly calculated 25% of 300 as 75.\nFinal Answer: 25% of 300 is 75."

}

[chain/end] [1:chain:AgentExecutor] [14.90s] Exiting Chain run with output:

{

"output": "25% of 300 is 75."

}

{'input': 'What is the 25% of 300?', 'output': '25% of 300 is 75.'}

question = "Tom M. Mitchell is an American computer scientist \
and the Founders University Professor at Carnegie Mellon University (CMU). \
What book did he write?"

result = agent(question)

> Entering new AgentExecutor chain...

Thought: I need to look up information about Tom M. Mitchell and the book he wrote. However, I don't have a tool that can help me with this task. I can only use a calculator and a time tool, which are not useful in this case.

Final Answer: I'm sorry, but I can't provide the information you're looking for.

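> Finished chain.

The agent cannot answer because the only tool loaded so far is the llm-math calculator: the wikipedia package was installed, but "wikipedia" was never passed to load_tools. Loading it as well, for example with load_tools(["llm-math", "wikipedia"], llm=llm), gives the agent a Wikipedia lookup tool. (The model's reference to a time tool does not match the tools loaded in this cell; that tool is only defined later in the notebook.)

Python Agent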
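The Python agent's tool is a Python REPL: the model writes code, the tool runs it, and whatever the code prints becomes the observation. Below is a minimal stdlib sketch of such a tool, assuming a shared dict as the session namespace so variables survive between calls; python_repl is a hypothetical name, not LangChain's PythonREPLTool. Like any REPL tool, it executes arbitrary code, so it should only be used in a sandboxed environment.

```python
import io
from contextlib import redirect_stdout


def python_repl(code: str, namespace: dict) -> str:
    """Run code in a shared namespace and return whatever it printed (or the error)."""
    buffer = io.StringIO()
    try:
        with redirect_stdout(buffer):
            exec(code, namespace)
    except Exception as exc:
        # Report the error as text so the agent can observe it and try again.
        return f"{type(exc).__name__}: {exc}"
    return buffer.getvalue()


session: dict = {}
snippet = (
    "numbers = ['10', '20', '30', '40', '50']\n"
    "numbers = [int(num) for num in numbers]\n"
    "print(sum(numbers))"
)
print(python_repl(snippet, session))                 # 150
print(python_repl("print(numbers[0])", session))     # 10 (state persists between calls)
```

This is also why the agent in the run below ends its code with print(total): only printed output reaches the observation.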

from langchain.agents.agent_toolkits import create_python_agent
from langchain.tools.python.tool import PythonREPLTool

# Create an agent whose tool is a Python REPL: it writes code, runs it, and reads what the code prints
agent = create_python_agent(
    llm,
    tool=PythonREPLTool(),
    verbose=True
)

numbers = ["10", "20", "30", "40", "50"]

agent.run(f"""sum all the numbers \
and print the output: {numbers}""")

> Entering new AgentExecutor chain...

The input is a list of strings, each representing a number. To find the sum, I need to convert each string to an integer, then use the built-in `sum` function. I will write a list comprehension to convert the strings to integers, then pass the result to `sum`. I will print the result to observe it.

Action: Python_REPL

Action Input: numbers = ['10', '20', '30', '40', '50']

numbers = [int(num) for num in numbers]

total = sum(numbers)

print(total)

Observation: 150

Thought:I have successfully calculated the sum of the numbers in the list.

Final Answer: 150

> Finished chain.

'150'

Custom-made Tools

LangChain users can create their own tools with the tool decorator and connect their agents to their preferred data sources.

For example, a custom tool can be a function that returns the current date. Once the function is added to the agent’s list of tools, the agent can correctly report the current date when asked.
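Conceptually, the tool decorator turns a plain function into a tool object whose name comes from the function and whose description, which the LLM reads when choosing tools, comes from the docstring. The stdlib sketch below mirrors that idea only; tool, Tool, and today are hypothetical stand-ins, not LangChain's implementation, which also handles argument schemas and more.

```python
import inspect
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass
class Tool:
    name: str
    description: str
    func: Callable[[str], str]


def tool(func: Callable[[str], str]) -> Tool:
    """Hypothetical decorator: wrap a function as a Tool named after it, described by its docstring."""
    return Tool(name=func.__name__, description=inspect.getdoc(func) or "", func=func)


@tool
def today(text: str) -> str:
    """Returns today's date in ISO format. The input should be an empty string."""
    return date.today().isoformat()


agent_tools = [today]  # custom tools are simply added to the agent's tool list
by_name = {t.name: t for t in agent_tools}
print(by_name["today"].description)
print(today.func(""))
```

Because the docstring becomes the description, it is worth writing it for the model: say when to use the tool and what input it expects, as the time tool's docstring below does.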

# Optional: the code below only needs Python's built-in datetime module, not the DateTime package
!pip install DateTime

from datetime import date

from langchain.agents import tool

@tool
def time(text: str) -> str:
    """Returns today's date, use this for any \
    questions related to knowing today's date. \
    The input should always be an empty string, \
    and this function will always return today's \
    date - any date mathematics should occur \
    outside this function."""
    return str(date.today())

# Give the agent the calculator tool plus the custom time tool
agent = initialize_agent(
    tools + [time],
    llm,
    agent=AgentType.CHAT_ZERO_SHOT_REACT_DESCRIPTION,
    handle_parsing_errors=True,
    verbose=True)

agent.run("What date is tomorrow?")

> Entering new AgentExecutor chain...

Thought: I need to know today's date first, then add one day to it to get tomorrow's date. I can use the 'time' tool to get today's date.

Action:

```

{

"action": "time",

"action_input": ""

}

```

Observation: 2023-08-03

Thought:Now that I know today's date, I need to add one day to it to get tomorrow's date. However, I don't have a tool that can add days to a date. I'll have to do this manually. Since today is the 3rd of August, 2023, adding one day would make it the 4th of August, 2023.

Thought: I now know the final answer.

Final Answer: The date tomorrow is 2023-08-04.

> Finished chain.

'The date tomorrow is 2023-08-04.'
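In this run the agent added one day to the date "manually", in its own reasoning. That worked here, but LLM date arithmetic can slip at month or year boundaries, and the time tool's docstring deliberately leaves date math to someone else. One option is a second, deterministic tool. The add_days function and its "YYYY-MM-DD,N" input format below are hypothetical, a sketch of what such a tool could look like.

```python
from datetime import date, timedelta


def add_days(tool_input: str) -> str:
    """Hypothetical tool: input 'YYYY-MM-DD,N' -> the date N days later, as 'YYYY-MM-DD'."""
    try:
        start_text, days_text = tool_input.split(",")
        start = date.fromisoformat(start_text.strip())
        return (start + timedelta(days=int(days_text))).isoformat()
    except ValueError as exc:
        # Malformed input comes back as text so the agent can correct itself.
        return f"Error: expected 'YYYY-MM-DD,N' ({exc})"


print(add_days("2023-08-03,1"))  # 2023-08-04
print(add_days("2023-12-31,1"))  # 2024-01-01 (month and year roll over correctly)
```

Wrapped with the tool decorator and added to the agent's tool list alongside time, it would let the agent fetch today's date and then delegate the arithmetic instead of doing it in its head.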